Table of Contents

Useful as memory overcommit may be for other applications, it is bad news for your PostgreSQL database. With this article I am trying to raise the awareness of this problem. I'll give you hints for the proper kernel configuration and discuss the implications for a containerized setup.

Linux tries to conserve memory resources. When you request a chunk of memory from the kernel, Linux does not immediately reserve that memory for you. All you get is a pointer and the promise that you can use the memory at the destination. The kernel allocates the memory only when you actually use it. That way, if you request 1MB of memory, but use only half of it, the other half is never allocated and is available for other processes (or the kernel page cache).

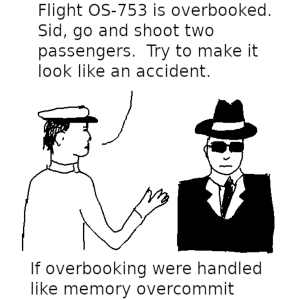

Overbooking is a concept that airlines all over the world have been using for a long time. They sell more seats than are actually in the plane. From experience, airlines know that some passengers don't show up for the flight, so overbooking allows them to make more profit. By default, Linux does the same: it deals out (“commits”) more memory than is actually available in the machine, in the hope that not all processes will use all the memory they allocate. This memory overcommit is great for using resources efficiently, but it has one problem: what if all the flight passengers show up, that is, what if the processes actually use more memory than is available? After all, you cannot offer a computer process a refund or a free overnight hotel room.

If a process tries to use memory that it has allocated, but the kernel cannot provide it, there is only one thing Linux can do: it activates a component called out-of-memory killer that kills a hapless process that uses a lot of memory. Killing processes frees their memory, and Linux now has that memory at its disposal. (Think of that the next time you don't get the flight you booked — it could be worse.)

As explained in my article about PostgreSQL crashes in Docker containers, the postmaster process becomes quite unnerved if something kills one of its child processes: the process could have died in the middle of a critical section and left the shared resources (mostly memory) in an inconsistent state. The only safe way to avoid data corruption is a crash and the subsequent crash recovery.

Crash recovery can take quite a while on a busy database — PostgreSQL has to replay all the WAL written by data modifications since the latest checkpoint. During that time, you cannot connect to the database. Any connection attempt will lead to the error “the database system is in recovery mode”.

You can configure the Linux kernel's behavior with the parameter vm.memory_overcommit. See the Linux kernel documentation for details. The default value 0 means “overcommit within certain (liberal) limits”, 1 means “overcommit without limits”, and 2 (“disable”) is the setting we want. You can change the setting as user root with

|

1 |

sysctl vm.overcommit_memory=2 |

To make the change persistent, edit /etc/sysctl.conf or create a new file in /etc/sysctl.d with the contents

|

1 |

vm.overcommit_memory = 2 |

Activate the setting by running this command as root:

|

1 |

sysctl --system |

When you disable memory overcommit, it is important to make sure that memory is currently not overcommited. Otherwise, your machine might become unavailable. To verify that, you can check the Committed_AS entry in /proc/meminfo: it should be less than the amount of memory Linux will be ready to commit to processes once you disable memory overcommit.

Without memory overcommit, Linux will return the error “out of memory” (ENOMEM) if a process tries to allocate more memory than the kernel is ready to deal out. If a PostgreSQL process receives this error, the running statement fails with the error code 53200, but the database as a whole remains operational.

If you disable memory overcommit, it is important to also configure how much memory Linux gives out to processes. By default, the Linux parameters vm.nr_hugepages and vm.overcommit_ratio determine the amount of memory that the kernel will commit. The formula is complicated:

|

1 |

committable memory = swap + (RAM - vm.nr_hugepages * huge page size) * vm.overcommit_ratio / 100 |

RAM and the size of the swap space can be found from the output of the operating system command free.

Now the default value for vm.overcommit_ratio is 50, and modern machines have much more RAM than swap space. That means that you'll get an out-of-memory error way before the system really runs out of RAM. So you should definitely adjust the limit. If you don't want to grapple with the above formula, there is a simpler way to configure the amount of available memory: you can set vm.overcommit_kbytes according to this formula:

|

1 |

vm.overcommit_kbytes = available RAM - vm.nr_hugepages - swap |

If you set vm.overcommit_kbytes, it will override vm.overcommit_ratio. You can set these parameters just like I described for vm.overcommit_memory in the previous section.

If you disable memory overcommit, you can keep the worst from happening. But you also don't want out-of-memory errors from your PostgreSQL statements. So how should you configure PostgreSQL to keep it from using more memory than is available? Unfortunately, the answer is not simple. What is easy to estimate is the amount of shared memory that PostgreSQL will use. The lion's share will be shared buffers. The size of this cache is determined by the PostgreSQL parameter shared_buffers. PostgreSQL allocates this memory when the server starts and never resizes it. To determine the overall requirement for shared memory of a PostgreSQL cluster, you can look at the PostgreSQL parameter shared_memory_size. So this part is fairly easy.

What is hard to estimate is the amount of private memory that the PostgreSQL processes will use. The PostgreSQL parameter that determines private memory usage is work_mem, but that is not a global limit for the whole cluster. Rather, it determines how much memory a single step of an execution plan may use. So a single statement can end up using multiples times work_mem! So how can you size work_mem to avoid going out of memory? As you might expect, there is no simple answer. However, the following coarse rule of thumb is often good enough:

|

1 |

shared_buffers + 2 * work_mem * maximum number of connections ≤ available memory |

Incidentally, this formula emphasizes the importance of a moderately sized connection pool for good performance: the fewer connections you have, the more generous you can be with work_mem, and lots of memory will speed up many non-trivial SQL statements.

Running PostgreSQL inside a container has become a popular option. Containers allow you to manage resources, and with proper orchestration, creating and destroying PostgreSQL clusters can become simple. Each container image has its copy of the software and system libraries, and it is possible to limit the system resources a container can consume. But there is one component that all containers on a host machine share: the operating system kernel. So if you disable memory overcommit in the kernel, that will affect all containers on the host machine.

If you want to run PostgreSQL in containers, you should run the PostgreSQL containers on their own host machine. That way, you can configure the Linux kernel to work well with PostgreSQL. Other software may rely on memory overcommit and may have trouble with kernels where memory overcommit is disabled.

It is essential for the stability of PostgreSQL to disable memory overcommit on the Linux kernel. Don't forget to adjust vm.overcommit_kbytes as appropriate. To avoid going out of memory, set shared_buffers and work_mem conservatively.

With your formula:

there won't be anything left for the page cache in case of memory pressure.

Don't we want to ring-fence a small percentage of RAM for it?

That's right. PostgreSQL has its own page cache (shared buffers), so I think it is more important to have the memory available for SQL statements when needed than for another page cache.

But you can certainly use a smaller setting if you always want to keep some kernel page cache available.

Thank you. I do think you are actually right. PostgreSQL has its own cache. And what's really hot in the kernel page cache won't necessarily be reclaimed by the kernel even if it's marked as reclaimable. However, memory management in Linux is a very complex subject, and I was very interested to hear your opinion on the matter.

The article has formula for configuring work_mem i.e. shared_buffers + 2 * work_mem * maximum number of connections ≤ available memory, why is the second term multiplied by 2? Just currious.

To be more on the safe side. Remember that a single statement can use several times

work_mem.Great article. Why do you still set vm.overcommit_ratio, if memory overcommit is disabled ?

If memory overcommit is enabled,

vm.overcommit_ratiois ignored. It is only used if memory overcommit is disabled.The explanation is very good and most memorable with flight overbooking concept. And about formulas I experienced that these formulas will give you the base settings, monitoring and reconfiguration is much needed.

Why do you subtract swap from total RAM memory when calculating the vm.overcommit_kbytes value? Shouldn't it be total RAM + swap so that we utilize all available resources on the system?

The formula in the kernel adds swap to calculate how much RAM to deal out. So I subtract the value again, because I want to avoid swapping.

In formula: "vm.overcommit_kbytes = available RAM - vm.nr_hugepages - swap". If we do not have vm.nr_hugepages set and we don't want to set it, can we add 0 for it, to get:

"vm.overcommit_kbytes = available RAM - 0 - swap" or is there some other formula in this case?

You got that right, just use 0 then. After all,

vm.nr_hugepagesis 0 on such a system.