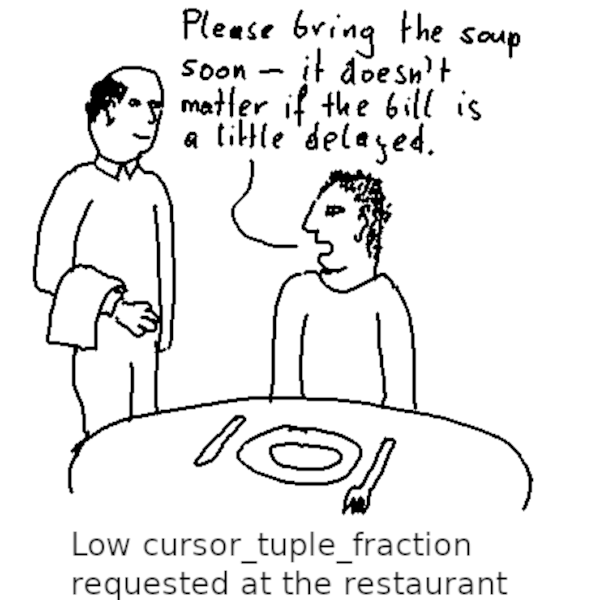

This article is a kind of failure story. I originally set out to write a piece about the PostgreSQL parameter cursor_tuple_fraction, only to discover that my boss has already covered that topic to some extent. Then I thought that I could investigate the parameter’s effect on the JDBC driver. That led me to an embarrassingly long wrestle with auto_explain and an eventual failure to find anything. But the world is littered with success stories, so why not share some failure? And there is the one or other interesting insight in store that might make the article worth your while.

Table of Contents

cursor_tuple_fractionIn a database, cursors are the canonical way of iterating through result sets. Cursors avoid the need to read the complete result set at once, which reduces the memory consumption. In PostgreSQL you can use the non-standard statement DECLARE to explicitly create a cursor, but usually you use client API functions or PL/pgSQL FOR loops that create PostgreSQL cursors “behind the scene”.

Transferring result rows to the client takes some time, and processing on the client side takes even more time. If an application processes a query result in chunks using a cursor, it can take a while until it reaches the end of the result set. Therefore, it is most important to get the first result rows as quickly as possible when you read the result set using a cursor. The total execution time is less important. This is where the PostgreSQL parameter cursor_tuple_fraction comes into play: for queries executed using a cursor, it tells the optimizer to prefer execution plans that produce the first result rows quickly, at the expense of the total query execution time. Hans’ article shows you a good example of what cursor_tuple_fraction does.

Another use case for cursor_tuple_fraction is if you use a cursor, but want to optimize the total execution time of the query. This is usually the case if you declare a cursor WITH HOLD, so that it can outlive a database transaction. The result set of a WITH HOLD cursor is materialized when the transaction commits, so that COMMIT has to wait until the complete result set has been calculated. To keep that time as short as possible, it is a good idea to change cursor_tuple_fraction to 1.0, so that PostgreSQL plans the query for the shortest total execution time.

cursor_tuple_fractionFor what follows, I need a little example that exhibits cursor_tuple_fraction. The following table will suffice:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

CREATE UNLOGGED TABLE large ( id bigint GENERATED ALWAYS AS IDENTITY, val double precision NOT NULL, payload text NOT NULL ); INSERT INTO large (val, payload) SELECT random(), 'some longer string to take up space' FROM generate_series(1, 1000000); -- update the statistics and set the hint bits VACUUM (ANALYZE) large; -- create constraints and indexes after loading data ALTER TABLE large ADD CONSTRAINT large_pkey PRIMARY KEY (id); CREATE INDEX large_val_idx ON large (val); |

I am using an UNLOGGED table to save myself the overhead of writing WAL. After all, we are only interested in query performance.

During normal execution, PostgreSQL uses a bitmap index scan and a sort, which is the fastest way to compute the whole result:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

EXPLAIN (COSTS OFF) SELECT * FROM large WHERE val < 0.01 ORDER BY id; QUERY PLAN ════════════════════════════════════════════════════════════ Sort Sort Key: id -> Bitmap Heap Scan on large Recheck Cond: (val < '0.01'::double precision) -> Bitmap Index Scan on large_val_idx Index Cond: (val < '0.01'::double precision) (6 rows) |

When the query is used in a cursor, PostgreSQL chooses an index scan, which can quickly find the first result rows in the correct order:

|

1 2 3 4 5 6 7 8 9 |

EXPLAIN (COSTS OFF) DECLARE c CURSOR FOR SELECT * FROM large WHERE val < 0.01 ORDER BY id; QUERY PLAN ════════════════════════════════════════════ Index Scan using large_pkey on large Filter: (val < '0.01'::double precision) (2 rows) |

By default, the PostgreSQL JDBC driver reads the complete result set at once. This can cause problems with big result sets. To mitigate the problem, you can use java.sql.PreparedStatement.setFetchSize(int) to make the driver fetch the result set in chunks. In addition, the driver uses server-side prepared statements if a java.sql.PreparedStatement is executed repeatedly. By default that happens from the fifth execution on, but you can change this prepare threshold.

To examine the JDBC driver’s behavior, I wrote this little Java program:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 |

public class Cursor { public static void main(String[] args) throws ClassNotFoundException, java.sql.SQLException { Class.forName("org.postgresql.Driver"); java.sql.Connection conn = java.sql.DriverManager.getConnection( "jdbc:postgresql://127.0.0.1:5432/test?user=laurenz" ); // cursors only work inside a transaction conn.setAutoCommit(false); java.sql.PreparedStatement stmt = conn.prepareStatement( "SELECT * FROM large WHERE val < 0.01 ORDER BY id" ); // fetch result in chunks of 1000 instead of all at once stmt.setFetchSize(1000); // Server side prepared statements are a PostgreSQL feature. // By default, they would used from the fifth execution on. // We need to get the underlying PostgreSQL object to change // that setting. // A more portable way would be to add "prepareThreshold=1" // as connection string option. org.postgresql.PGStatement pgstmt = stmt.unwrap(org.postgresql.PGStatement.class); // use server side prepared statements from the first execution on pgstmt.setPrepareThreshold(1); java.sql.ResultSet rs = stmt.executeQuery(); // iterate to the result set to read all rows long counter = 0; while (rs.next()) ++counter; System.out.println("Got " + counter + " rows."); rs.close(); stmt.close(); conn.close(); } } |

To examine the execution plan that PostgreSQL is using when running the query from the JDBC driver, the go-to tool is auto_explain. It allows you to capture the execution plan of all statements whose execution times exceeds a certain threshold. I enabled auto_explain with the following parameters in postgresql.conf:

|

1 2 3 4 |

# requires a restart when changed shared_preload_libraries = 'auto_explain' # log all statements auto_explain.log_min_duration = 0 |

I restarted PostgreSQL to activate the changed shared_preload_libraries and ran my sample program. Then I looked into the PostgreSQL log for the execution plan and found — nothing. Other statements (for example, statements that I executed by hand via psql) had their execution plans logged. Only my Java program failed to log an execution plan. It cannot be the fault of the client, because auto_explain operates on the database server, and PostgreSQL definitely executed the statement.

It took me a while to figure out what was going on. If you know PostgreSQL well, you might want to check if you can figure out what the problem was before reading on to the next section.

A PostgreSQL cursor is implemented by a data structure called a portal, which holds the current execution state. The trigger for auto_explain to log an execution plan is the duration of the statement. Consequently, it has to wait until the statement is done — before that, PostgreSQL doesn’t know the execution time. In the case of a portal, execution is done when the portal is closed. I had assumed that the portal gets closed when I close the result set (“rs.close()” in my sample program), but that doesn’t send a close message to the server. Instead, the portal gets closed when the program commits the transaction. Now I had disabled autocommit so that I could use a cursor, but I had forgotten to explicitly commit the transaction, because I only read from the database and my job was done anyway.

In order to get auto_explain to work as it should, I had to add one line:

|

1 2 3 4 5 6 7 8 9 10 |

public class Cursor { public static void main(String[] args) throws ClassNotFoundException, java.sql.SQLException { ... rs.close(); stmt.close(); conn.commit(); conn.close(); } } |

After that, I was ready for my next disappointment.

cursor_tuple_fraction has no effect when using the JDBC driverTo my surprise, the plan used by the JDBC driver was:

|

1 2 3 4 5 6 7 |

Query Text: SELECT * FROM large WHERE val < 0.01 ORDER BY id Sort (cost=11597.46..11622.55 rows=10034 width=52) Sort Key: id -> Bitmap Heap Scan on large (cost=190.19..10930.57 rows=10034 width=52) Recheck Cond: (val < '0.01'::double precision) -> Bitmap Index Scan on large_val_idx (cost=0.00..187.68 rows=10034 width=0) Index Cond: (val < '0.01'::double precision) |

Why does PostgreSQL not use the “fast start-up” plan? If you read my article about parallel query and the JDBC driver, you may suspect why. The JDBC driver does not use the DECLARE statement to create a cursor, but uses another technique provided by the PostgreSQL Frontend/Backend protocol:

The overall execution cycle consists of a parse step, which creates a prepared statement from a textual query string; a bind step, which creates a portal given a prepared statement and values for any needed parameters; and an execute step that runs a portal’s query. In the case of a query that returns rows (

SELECT,SHOW, etc.), the execute step can be told to fetch only a limited number of rows, so that multiple execute steps might be needed to complete the operation.

The JDBC driver implements cursors by repeatedly sending the “execute” message for the same portal with a row count limit. That is a perfectly valid technique, but the PostgreSQL server doesn’t know at query planning time that the result will be fetched in chunks. Consequently, it cannot know that it should plan the query using cursor_tuple_fraction. In other words, the JDBC driver can never benefit from fast start-up plans, unless you use an explicit LIMIT clause.

I ended up spending too much time with no big discovery to share. Still, I learned a couple of things:

cursor_tuple_fraction

Leave a Reply