Nowadays, most Debian packages are maintained in Git repositories, with the majority hosted on Debian's GitLab instance at salsa.debian.org.Debian is running a "vcswatch" service that keeps track of the status of all packaging repositories that have a Vcs-Git (and other VCSes) header set. This status is stored in a PostgreSQL database, which is then utilized by Debian's package maintenance infrastructure for various purposes. One such application is to identify repositories that may require a package upload to release pending changes.

Naturally, this amount of Git data required several expansions of the scratch partition on qa.debian.org in the past, up to 300 GB in the last iteration. Attempts to reduce that size using shallow clones (git clone --depth=50) resulted in only marginal space savings of a few percent. Running git gc on all repos helps somewhat, but is a tedious task. As Debian is growing, so are the repos both in size and number. I ended up blocking all repos with checkouts larger than a gigabyte, and even then, the only solutions were again either expanding the disk space or lowering the blocking threshold.

Since we only require minimal information from the repositories, specifically the content of debian/changelog and a few other files from debian/, along with the number of commits since the last tag on the packaging branch - it made sense to try obtaining this information without fetching a full repo clone. The question of whether we could retrieve this solely using the GitLab API at salsa.debian.org was pondered but never answered. But then, in #1032623, Gábor Németh suggested the use of git clone --filter blob:none. This suggestion remained unattended in the bug report for almost a year until the next "disk full" event prompted me to give it a try.

The blob:none filter makes git clone omit all files, fetching only commit and tree information. Any blob (file content) needed at git run time is transparently fetched from the upstream repository and stored locally. It turned out to be a game-changer. The larger repositories I tried it on shrank to just 1/100 of the original size.

Poking around I figured we could do even better by using tree:0 as filter. This additionally omits all trees from the git clone, once again fetching the information only at run time when needed. I tried it on some of the larger repos, and they shrank to just 1/1000 of their original size.

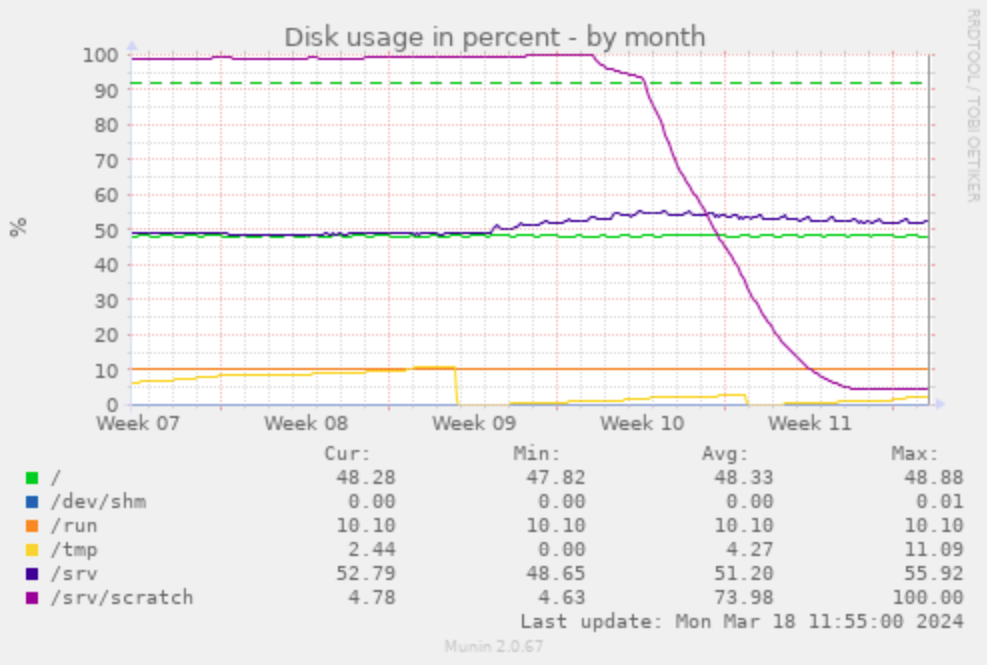

I deployed the new option on qa.debian.org and scheduled all repositories to fetch a new clone on the next scan:

The initial drop from 100% to 95% is my first "what happens if we block repos > 500 MB" attempt. Over the week after that, the git filter clones reduced the overall disk consumption from almost 300 GB to 15 GB, a 1/20 reduction. Some repos shrank from GBs to below a MB.

Perhaps I should make all my git clones use one of these filters.

If you do not want to miss any news in the future, please subscribe to our newsletter.

Leave a Reply