Table of Contents

(Updated 2023-02-22) In my daily work, I see many databases with a bad setting for max_connections. There is little advice out there for setting this parameter correctly, even though it is vital for the health of a database. So I decided to write up something.

max_connections?According to the documentation, max_connections determines the maximum number of concurrent connections to the database server. But don't forget that superuser_reserved_connections of these connections are for superusers only (so that superusers can connect even if all other connection slots are blocked). With the default setting of 100 and 3 for these parameters, there are 97 connections open for application purposes.

Since PostgreSQL reserves shared memory for all possible connections at server start, you have to restart PostgreSQL after changing max_connections.

max_connections?There are several reasons:

max_connections, people want to be “on the safe side”.ERROR: remaining connection slots are reserved for non-replication superuser connectionsIt seems like an easy enough solution to increase the number of connections.

max_connections?Setting max_connections to a high value can have severe consequences:

As long as all but three of your 500 database sessions are idle, not much harm is done. Perhaps taking the snapshot at the beginning of each query is a little slower, but you probably won't notice that.

But there is nothing that can prevent 100 of the connections from becoming active at the same time. Perhaps a particular event occurred (everybody wants to buy a ticket the minute after sales started). Perhaps a rogue statement kept a lock too long and processes ready to run “piled up” behind the lock.

If that happens, your CPU and/or I/O subsystem will be overloaded. The CPU will be busy switching between the processes or waiting for I/O from the storage subsystem, and none of your database sessions will make much progress. The whole system can “melt down” and become unresponsive, so that all you can do is reboot.

Obviously, you don't want to get into that situation, and it is not going to improve application performance either.

There is a limited amount of RAM in your machine. If you allow more connections, you can allot less RAM to each connection. Otherwise, you run the danger of running out of memory. The private memory available for each operation during query execution is limited by work_mem. So you will have to set this parameter low if you use a high value for max_connections.

Now work_mem has a direct influence on query performance: sorting will be faster if it can use enough RAM, or PostgreSQL may prefer a faster hash join or hash aggregate and avoid a sort at all.

So setting max_connections high will make queries perform slower than they could, unless you want to risk running out of memory.

max_connections always bad?If you read the previous section carefully, you will see that it is not actually max_connections that is the problem. Rather, you want a limit on the actual number of connections. max_connections is just an upper limit for that number. While it is important not to have too many client connections, it is also a bad idea to enforce that limit with max_connections, because exceeding that limit will cause application errors.

We will see below that a connection pool is the best way to enforce a limit on the number of active sessions. Then there is no danger of too many connections, and there is no need to set max_connections particularly low. On the contrary: if your application is set up for high availability and fails over to a different application server, it can take a while for the old client connections to time out on the server. Then it can be important to set max_connections high enough to accommodate the double number of client connections for a while.

You want to utilize your resources without overloading the machine. So your setting should satisfy

|

1 2 |

connection limit < min(num_cores, parallel_io_limit) / (session_busy_ratio * avg_parallelism) |

num_cores is the number of cores availableparallel_io_limit is the number of concurrent I/O requests your storage subsystem can handlesession_busy_ratio is the fraction of time that the connection is active executing a statement in the databaseavg_parallelism is the average number of backend processes working on a single query.The first two numbers are easy to obtain, and you usually have a good idea if you will use a lot of parallel query or not. But session_busy_ratio can be tricky to estimate. If your workload consists of big analytical queries, session_busy_ratio can be up to 1, and avg_parallelism will be one more than max_parallel_workers_per_gather. If your workload consists of many short statements, session_busy_ratio can be close to 0, and avg_parallelism is 1.

Often you will have to use a load test to determine the best value of max_connections by experiment. The aim is to keep the database busy, but not overloaded.

For some workloads, this formula or an experiment would lead to a high setting for max_connections. But you still shouldn't do that, as stated above: the more connections you have, the bigger the danger of many connections suddenly becoming active and overloading the machine. The better solution in this case is to use a connection pool to increase session_busy_ratio.

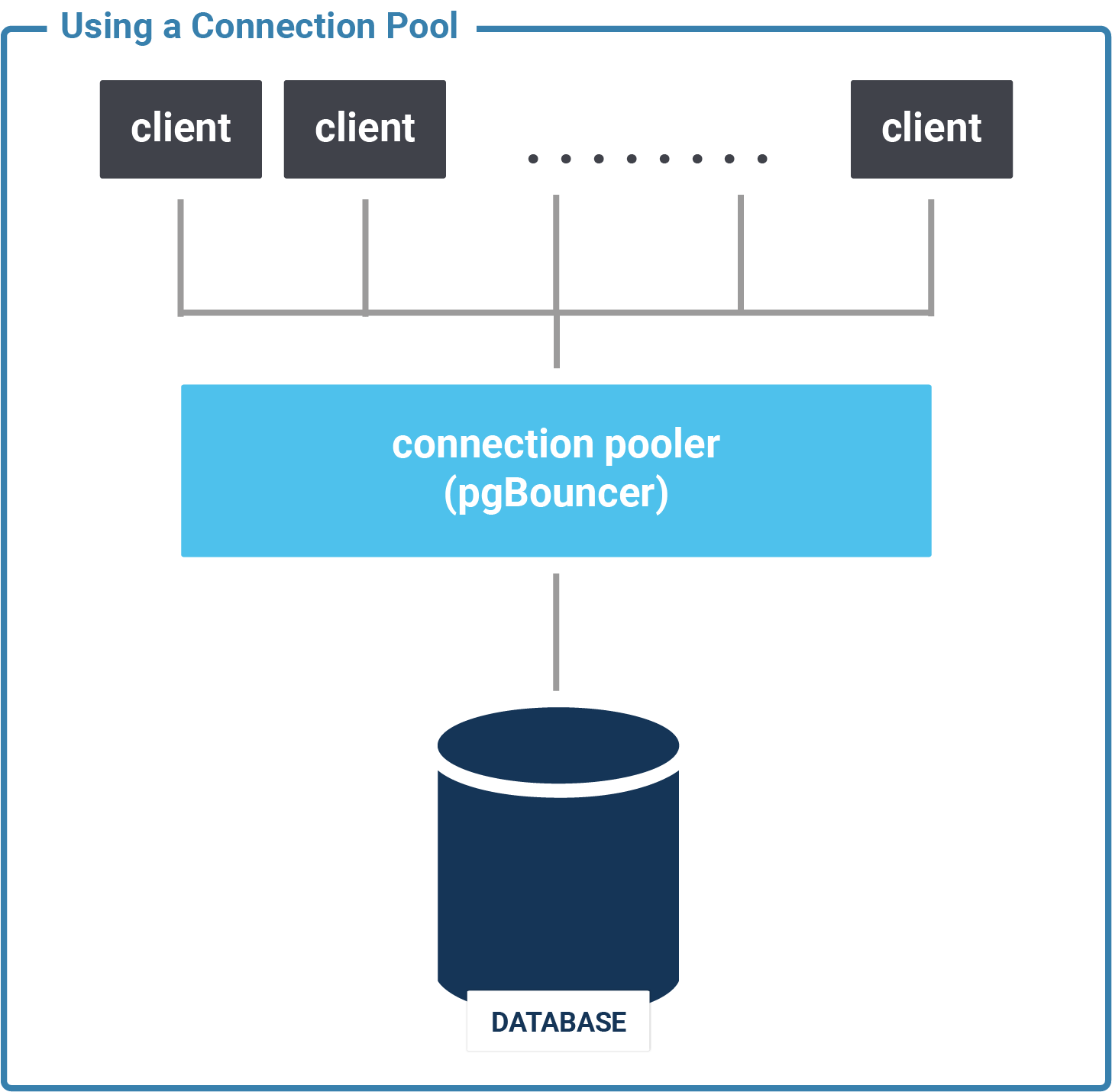

A connection pool is a piece of software that keeps a number of persistent database connections open. It uses these connections to handle database requests from the front-end. There are two kinds of connection pools:

Connection pools provide an artificial bottleneck by limiting the number of active database sessions. Using them increases the session_busy_ratio. This way, you can get by with a much lower setting for max_connections without unduly limiting your application.

It is important for the health and performance of your application not to have too many open database connections.

If you need to be able to handle many database connections due to architecture limitations (the application server has no connection pool, or there are too many application servers), use a connection pooler like pgBouncer. Then you can give each connection the resources it needs for good performance.

If you want to know how to best size your connection pool, you could read my article on that topic.

Hello Lorenz based on your experience in PostgreSQL some extension or wrapper that allows me to insert information from PostgreSQL tables directly to other tables in a SyBase server ?

you will need the tds_fdw in this case. MS SQL works for sure ... Sybase should work (it uses the same protocol) but we have not tested in a while.

Hello Lorenz,

I think my app lauch a pool of 50 connections by database.

And my app is multitenant and have 1 database by tenant.

Can I check the number of connection with netstat command?

To count incoming TCP connections on port 5432, you could

netstat -n | grep ESTABLISHED | grep 'b5432b'But it is easier to query

pg_stat_activity.Thank you for the article, it's great as always! It would be very helpful to add an example calculation for instance for 16 or 32 cores SKU, for OLTP or OLAP. That would help imagine the problem for the readers

Thanks for the feedback.

Do you really think that pulling numbers out of thin air and calculating the result would be useful?

It is not like the formula is complicated...

PGBouncer does not support posgresql prepared statements, nor do I see how it ever could. So this solution is worthless.

I'll treat this as a serious response rather than trolling.

Prepared statements, like temporary tables or

WITH HOLDcursors, live longer than a single transaction and consequently can be problematic with statement or transaction pooling mode in pgBouncer. There is no problem whatsoever in session pooling mode!A good workaround in transaction pooling mode would be to use a PL/pgSQL function instead of a prepared statement: execution plans of SQL statements in such functions get cached just like the ones of prepared statements.

Thanks for this article, very useful! Could you explain what the operator "," in "max(num_cores, parallel_io_limit) " means in your formula?

This is not the SQL aggregate function

max()(which takes only a single argument). What I mean is that you should take the maximum, that is the larger, of these two values.I have some question if you can suggest some possible solutions

1) We have PostgreSQL DB for sonarqube which we tried upgrading. Upgrading from 10.23 to 11.20 was smooth and we see the the DB is available but as the DB is connected to sonarqube we see high CPU utilization and high number of connections after which we 502/504 for sonarQube and the instance we used are the t3.micro and t3.small . Any suggestion to resolve this issue.

2) We had to use the backup to revert back older version of DB but we see that there is a AWS scheduled maintenance required under maintenance & backup which happens next this week sept 17, 2023. We have this issue last week and DB got upgraded and sonarservice is down. Is there a way to skip the AWS scheduled maintenance?

This has no connection to my article. Get a technical consultant.

I get the error which is mentioned in your article ERROR: remaining connection slots are reserved for non-replication superuser connections.

The max_connection threshold is reached and i get 502/504 error for sonarQube UI.

Thank you

That's just a symptom. You seem to have no working connection pool in place, and the worse performance on your new system overloads your database.

You'll have to get a working connection pool, but your key task is to find out what the cause of the bad performance is. This requires deeper analysis of your workload, something I certainly cannot provide in a comment.

As I said, get a knowledgeable PostgreSQL database person on board.

Hello. We have a web application in docker swarm witch is used by ~400-500 users. Our PostgresDB is located on a separate server. Server - 12 Cores (2 thread per core) 64 GB RAM + ssd. Now max_conn = 1000, but when I check pg_stat_activity, there is always < 120. Mb did we misconfigure the database?