Container technologies have revolutionized the way we develop and deploy software. They offer flexibility, scalability, and efficiency that are essential in today's increasingly complex IT landscape. In the coming weeks, we will take you on a journey into the world of PostgreSQL in containers. We will discuss both Docker and Kubernetes with corresponding examples. In this initial post, we will explain the advantages of containers over VMs and why you should get more involved with containers.

Containers are essentially isolated environments that contain all the software components needed to run a specific application or function. Compared to virtual machines, however, containers are considerably leaner. They share the host's operating system kernel instead of bringing their own, which makes them resource-efficient and fast. This lightweight nature, combined with the microservice approach in which each container fulfills one specific function, enables efficient and flexible scaling. For example, a web shop could consist of several nginx containers, a database container (or more for high availability) and a connector for the CRM system. Like physical containers on a ship, software containers can be easily moved, replicated and scaled, making them an ideal solution for dynamic and growth-oriented projects.

Containers offer the following benefits:

Containers have the following drawbacks:

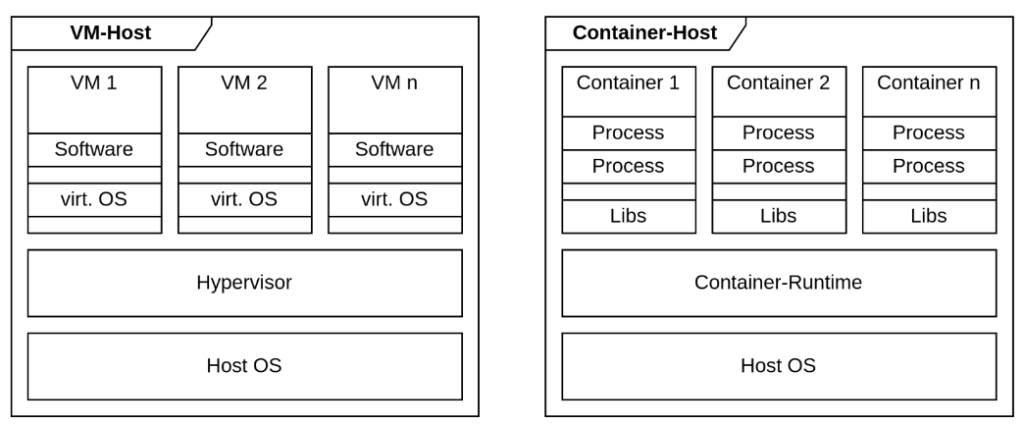

One of the biggest differences between VMs and containers lies in the type of virtualization. A VM virtualizes a physical machine and therefore operates at the hardware level. This means that each VM contains a complete guest OS and that a hypervisor is required on the host to run multiple VMs independently.

A container, by contrast, virtualizes at the operating-system level. Containers therefore share the kernel of the host, and applications are isolated from each other by means of Linux namespaces and cgroups.
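To make this more tangible, here is a minimal sketch of how the namespaces and cgroups of a process can be inspected on a Linux host through the /proc filesystem. The helper names `list_namespaces`, `namespace_id` and `show_cgroups` are our own and purely illustrative, and the sketch assumes that /proc is mounted.

```shell
# List the namespace types a process (default: this shell) belongs to.
list_namespaces() {
  ls "/proc/${1:-$$}/ns"
}

# Print the identifier of one namespace, e.g. "pid", for a process.
# Two processes in the same namespace report the same identifier.
namespace_id() {
  readlink "/proc/${2:-$$}/ns/$1"
}

# Show the cgroup membership of a process, if the kernel exposes it.
show_cgroups() {
  if [ -r "/proc/${1:-$$}/cgroup" ]; then
    cat "/proc/${1:-$$}/cgroup"
  fi
}

list_namespaces
namespace_id pid
show_cgroups
```

Running the same helpers once on the host and once inside a container should normally show different namespace identifiers, which is exactly the isolation described above.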

By using the shared kernel and dispensing with a separate OS, containers have a lower overhead than VMs, but at the same time they provide weaker isolation.

Because containers provide weaker isolation than VMs, it is worth taking a closer look at this topic. As already mentioned, containers share the host kernel, and isolation is based on Linux namespaces and cgroups. This means that a vulnerability in the kernel or in the container runtime can enable attacks on the running containers. Conversely, a successful breakout from a container can have serious consequences for the host and for the other containers.
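The shared kernel can be made visible with a small sketch that compares the kernel release reported on the host with the one reported inside a container. It assumes a Linux host with a working Docker installation and access to the `rockylinux:9.3` image. With Docker Desktop on macOS or Windows, the containers run inside a helper VM, so the comparison is then against that VM's kernel. The variable names are our own.

```shell
# Kernel release as seen by the host.
host_kernel="$(uname -r)"
echo "host kernel:      $host_kernel"

# Kernel release as seen from inside a container (only if Docker is usable).
if command -v docker >/dev/null 2>&1; then
  container_kernel="$(docker run --rm rockylinux:9.3 uname -r 2>/dev/null)" \
    || container_kernel="unavailable"
  echo "container kernel: $container_kernel"
else
  echo "docker not found, skipping the container check" >&2
fi
```

On a native Linux host, both lines should report the same release, because the container has no kernel of its own.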

Two container engines are particularly well known: Docker and Podman. The engine handles deploying and managing the containers and provides everything the user needs to interact with the system. The containers themselves are executed by the container runtime, which takes care of the container processes and their isolation.

A major difference between Docker and Podman concerns processes that run as root. By default, the Docker daemon runs as root, which can have devastating effects if an attacker breaks out of a container. Podman, in contrast, does without a daemon and runs containers directly in the context of the invoking user. A potential attacker who breaks out of such a container therefore does not automatically have root rights on the host.
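Whether a daemon on a given host actually runs as root can be checked with a few lines of shell. The following is only a sketch: `process_owner` is a hypothetical helper of ours, and `dockerd` is the usual process name of the Docker daemon on Linux.

```shell
# Print the owning user(s) of all processes with the given command name.
# Prints nothing if no such process is running.
process_owner() {
  ps -A -o user= -o comm= 2>/dev/null \
    | awk -v name="$1" '$2 == name { print $1 }' \
    | sort -u
}

# Which user owns the Docker daemon on this host, if it is running?
process_owner dockerd
```

With Podman there is no equivalent daemon process to look for, because each container is started directly by the user who invokes the command.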

Enough talk, let's put our words into action and build our first, fairly simple container. To do this, we need Docker and a Dockerfile, which contains all the instructions for building a container image. We can start with either a completely empty image or use a base image. The advantage of a base image is that it allows us to integrate an already familiar environment into the container. Our example is based on Rocky Linux 9, i.e. we carry out all further steps in the Dockerfile in the same way as we would in Rocky Linux.

First, we create a small bash script, hello_world.sh, that we want to start later in the container.

```shell
#!/bin/bash
echo "Hello World"
```
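One detail is easy to miss: the script is later started directly through `CMD ["/hello_world.sh"]`, so it must be executable. `COPY` keeps the file mode of the source file, which means the execute bit has to be set before the image is built. The following sketch creates the script in the current directory, marks it as executable and runs it once locally as a quick check.

```shell
# Create the script next to the Dockerfile.
cat > hello_world.sh <<'EOF'
#!/bin/bash
echo "Hello World"
EOF

# COPY preserves the file mode, so set the execute bit before building.
chmod +x hello_world.sh

# Quick local check before the script goes into the image.
./hello_world.sh
```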

Now we create the following Dockerfile:

```dockerfile
# Base image used for the container image
FROM rockylinux:9.3

# We install the EPEL repo and the dumb-init and procps packages. We also update all packages.
RUN dnf -y install --nodocs https://dl.fedoraproject.org/pub/epel/epel-release-latest-9.noarch.rpm vim && \
    dnf -y update && \
    dnf -y install dumb-init procps && \
    dnf -y clean all

# This copies our shell script into our image in the specified path
COPY hello_world.sh /hello_world.sh

# As an entry point, we start dumb-init as a process within the container with PID 1.
ENTRYPOINT ["/usr/bin/dumb-init", "--"]

# CMD then executes our shell script in the context of dumb-init
CMD ["/hello_world.sh"]
```

We use dumb-init as the ENTRYPOINT so that it runs as PID 1 in the container. There it acts as an init process that forwards signals correctly and reaps zombie processes. Even though this is not mandatory in our simple example, we recommend checking out this article by Laurenz Albe, which explains the importance of an init process for PostgreSQL containers.
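To illustrate the idea of signal forwarding, here is a strongly simplified sketch in plain shell. It is not how dumb-init is implemented and it is no replacement for it. The function `mini_init` is a hypothetical helper of ours that starts a command as a child process, passes SIGTERM on to it and returns the child's exit status.

```shell
# Simplified stand-in for an init process: run a command as a child,
# forward SIGTERM to it and return the child's exit status.
mini_init() {
  child=""
  # Install the handler before starting the child so no signal is lost.
  trap 'if [ -n "$child" ]; then kill -TERM "$child" 2>/dev/null; fi' TERM
  "$@" &
  child=$!
  status=0
  wait "$child" || status=$?
  # A trapped signal interrupts wait, so keep waiting until the child is gone.
  while kill -0 "$child" 2>/dev/null; do
    status=0
    wait "$child" || status=$?
  done
  return "$status"
}

# Run in a subshell so the trap does not leak into the calling shell.
( mini_init echo "Hello World" )
```

A real init process additionally reaps orphaned processes that get re-parented to PID 1, which this sketch does not attempt.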

Finally, we build and launch the container.

```shell
# We use this to build the container. Docker must be able to find the Dockerfile and uses
# the current path of the user as the source for relative links.
docker build .
...
=> => writing image sha256:a9d4b72b0d69784b0ef3cd06f2fe16b2ba7ad3eac6222

# We can now start the container
docker run sha256:a9d4b72b0d69784b0ef3cd06f2fe16b2ba7ad3eac6222
> Hello World

# We can overwrite the CMD command when starting the container and simply start it
# with the bash in the container, for example.
docker run -it sha256:a9d4b72b0d69784b0ef3cd06f2fe16b2ba7ad3eac6222 /bin/bash
# -i keeps an STDIN open and -t allocates a pseudo-TTY
> [root@24c237d9bf8b /]# ps aux
USER         PID %CPU %MEM    VSZ   RSS TTY      STAT START   TIME COMMAND
root           1  2.0  0.0   2500  1024 ?        Ss   18:14   0:00 /usr/bin/dumb-init -- /bin/bash
root           7  0.0  0.0   4840  3712 pts/0    Ss   18:14   0:00 /bin/bash
root          21  0.0  0.0   7536  3200 pts/0    R+   18:14   0:00 ps aux
```
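Working with the raw image ID quickly becomes unwieldy. As a small sketch, the image can be given a name at build time with `-t` and then started under that name. The name `hello-container:latest` is a hypothetical choice of ours, and `--rm` removes the stopped container again after it exits. The sketch assumes that the Dockerfile and hello_world.sh are in the current directory.

```shell
# Hypothetical image name, chosen only for this example.
image="hello-container:latest"

if command -v docker >/dev/null 2>&1; then
  # Build with a tag, then run by name and clean up the container afterwards.
  docker build -t "$image" . && docker run --rm "$image"
else
  echo "docker not found, skipping build and run" >&2
fi
```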

The next post in the series will focus more on the topic of container construction. We will build our first PostgreSQL container and look at various concepts for the structure of Dockerfiles.

Can you give me a reason why I should not just use a normal (apt install) Postgres on my old PC, now running Lubuntu?

I can run several engines there, if needed. But I would rather buy another old PC (25€), and then I always have 100% RAM bus speed, 100% network speed and 100% isolation between engines.

The only reason to use 2 engines is testing a new Postgres version. I now have 16 and 17rc. Why run several copies of the same? Docker has some advantage there, saving RAM. I think 2 separate PCs are always better if several engines are needed (i.e. prod/test).